Google parent Alphabet chair John Hennessy

By Huixia Sun

BEIJING, December 10 (TMTPOST) -- John Hennessy, the chair of Google parent Alphabet Inc., has asserted that dramatic breakthroughs in deep learning and machine learning will be the new driver of the computer industry over the next two decades.

“We’ve come to an interesting time in the computer industry… will have a whole new driver and that driver is created by the dramatic breakthroughs that we’ve seen in deep learning and machine learning. I think this is going to make for really interesting next 20 years,” Hennessy, a former president of Stanford University, told the online audience during the 2021 T-EDGE Conference held in Beijing.

In a keynote speech delivered at the international event jointly organized by TMTPost Group, Daxing Industry Promotion Center and China New Media Development Zone, Hennessy, a veteran of the computer industry, shared his insights into past developments and future trends of the industry as well as likely solutions to pressing challenges right now.

In plain language, he encapsulated the history of the computer industry by pinpointing two major turning points, the first of which was the advent of personal computers and microprocessors.

From the 1960s to the 1980s, computer companies were largely vertically integrated, he said, citing IBM as an example. They did “everything”, including chips, disks, applications and databases.

Following the introduction of personal computers, a vertically organized industry was transformed into a horizontally organized industry. “We had silicon manufacturers. Intel, AMD, Fairchild and Motorola were doing processors. We had a company like TSMC, making chips for others… and Microsoft then came along and did OS and compilers on top of that. And companies like Oracle came along and built their applications, databases and other applications on top of that,” he said, describing the changes that took place in the late 1980s and the 1990s.

Now with dramatic breakthroughs in deep learning and machine learning, “we’re at a real turning point at this point in the history of computing,” he declared.

He explained that with the breakthroughs in deep learning and machine learning, general-purpose processors will remain important but become less central. For the fastest, most important applications, domain-specific processors will begin to play a key role. “So rather than perhaps so much horizontal, we will see again a more vertical integration in the computing industry. We are optimizing in a different way from we had in the past,” he said.

He used a security camera as an example to explain the combination of domain-specific software and optimized hardware in a domain-specific architecture. In a specific field, many highly specialized processors will be used to address one particular problem. The processor in a security camera is going to have a very limited use. The key is to optimize power, efficiency and cost for that one use. “Thus a different kind of integration is emerging now and Microsoft, Google and Apple are all focusing on this,” he said.

He also took the Apple M1 as an example to illustrate the new trend. “If you look at the Apple M1, it’s a processor designed by Apple with a deep understanding of the applications that are likely to run on that processor. So they have a special purpose graphics processor; they have a special-purpose machine learning domain accelerator on there; and then they have multiple cores. But even the cores are not completely homogeneous. Some are slow low-power cores, and some are high-speed high-performance higher-power cores. So we see a completely different design approach, with a lot more co-design and vertical integration,” he said.

“Era of Dark Silicon” and Domain-Specific Co-Design

Hennessy’s 30-minute speech also highlighted recent breakthroughs in deep learning and machine learning, including image recognition used for self-driving cars and medical diagnosis, protein folding and natural language translation. He explained why artificial intelligence (AI) has suddenly made significant progress in the past few years although the concept has existed for about 60 years.

He attributed the big leaps to two major developments: the availability of massive data and massive computational capability.

“The Internet is a treasure trove of data that can be used for training. ImageNet was a critical tool for training image recognition. Today, close to 100,000 objects are on the ImageNet and more than 1,000 images per object, enough to train image recognition system really well,” he said.

The huge computational capability is mainly derived from large data centers and cloud-based computing, he said, adding that training takes hours and hours using thousands of specialized processors. “We simply didn’t have this capability earlier. So that was crucial to solving the training problem.”

However, computing capability cannot keep increasing at this pace forever. Gordon Moore predicted in 1975 that semiconductor density would continue to grow quickly, doubling every two years. But a divergence from that predicted course began in 2000, he noted in the speech.
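To make the scale of that prediction concrete, the short sketch below works out how much density would grow if it doubled every two years without interruption. It is only an illustration of the arithmetic, not something presented in the speech; the function name `projected_density_factor` and its parameters are hypothetical.

```python
def projected_density_factor(years: float, doubling_period: float = 2.0) -> float:
    """Growth factor after `years` if density doubles every `doubling_period` years.

    Hypothetical helper for illustration only: it models an idealized,
    uninterrupted doubling, not actual semiconductor data.
    """
    return 2.0 ** (years / doubling_period)


if __name__ == "__main__":
    # Ten doublings over two decades: 2 ** 10
    print(projected_density_factor(20))  # 1024.0
```

Under this idealized assumption, two decades of doubling would yield a roughly thousandfold increase, which is why even a modest slowdown in the doubling rate compounds into a large shortfall.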

“We are in the era of dark silicon where multicores often slow down or shut off to prevent overheating and that overheating comes from power consumption,” he said.

Hennessy proposed a new solution to the challenge, which may go in three directions. The first is software-centric mechanisms that focus on improving the efficiency of software; the second is the hardware-centric approach; and the third is a combination of the two.

“This approach is called domain specific architectures or domain specific accelerator. The idea is to just do a few tasks but do those tasks extremely well. We’ve already seen examples of this in graphics for example or modem that’s inside your cell phone. Those are special purpose architectures that use intensive computational techniques,” he said.

“The good news here is that deep learning is a broadly applicable technology. It’s the new programming model, programming with data, rather than writing massive amounts of highly specialized code. Use data to train deep learning model to detect that kind of specialized circumstance in the data,” Hennessy explained, implying that the wide applicability of deep learning has the potential to circumvent the problems of power efficiency and transistor growth.

Hennessy has been chair of Alphabet Inc. since February 2018. Prior to that, he was an independent director at Google and Alphabet from 2007. He was Stanford University’s tenth president from 2000 until his retirement in 2016, leading the university to become more academically competitive in a rivalry with Ivy League schools including Harvard and Yale. He joined Stanford’s faculty in 1977 as an assistant professor of electrical engineering.

He is also a laureate of the 2017 ACM A.M. Turing Award, along with retired UC Berkeley professor David A. Patterson.

In addition to his outstanding academic and leadership achievements, Hennessy co-founded chip design startup MIPS Computer Systems, which was acquired by Silicon Graphics Inc. in 1992.

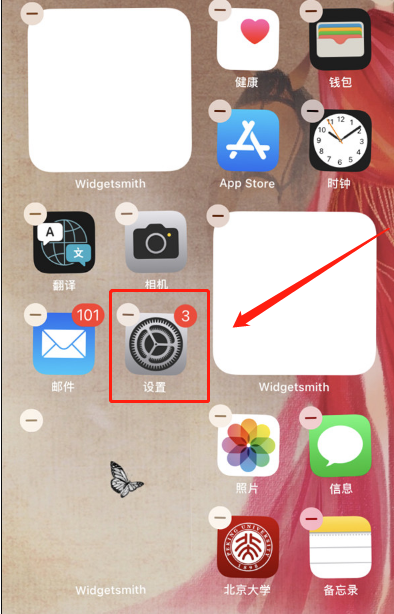

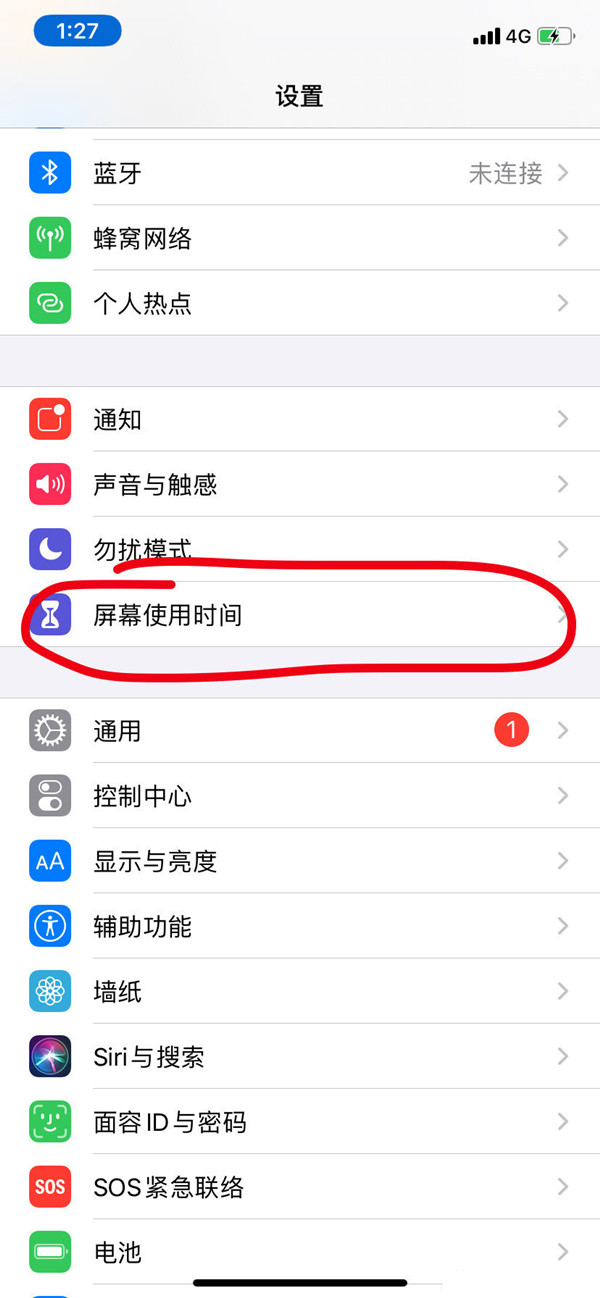